Ethical Implications of Artificial Intelligence

The application of AI in day-to-day tasks has raised ethical concerns about employment, privacy, and surveillance, along with fears of social bias and questions of responsibility. Leavy (2023) quotes Chamorro-Premuzic: “Machines performing cognitive tasks capable of doing what humans do threatens to erode human uniqueness. Where does that leave the human touch? In Creativity and Empathy, the answer must be reclaiming the center stage” (p. 39).

Chen and Tsai (2021) examine the implications of AI for identity and the self, asking whether an AI that can emulate emotion and language so realistically has an identity of its own and, if so, what differentiates it from human beings. These questions stress the need to safeguard the narrative dimension of human consciousness, which AI cannot replicate.

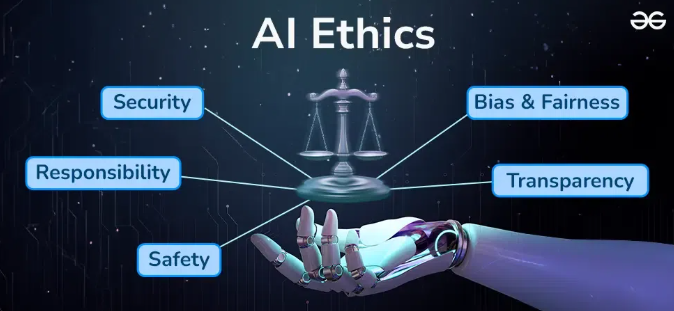

Responsible use of AI requires regulation and oversight. Many systems are “black boxes,” with complex algorithms that are difficult to interpret. Without transparency, users cannot challenge harmful decisions. Wang et al. (2021) state that “AI [in educational settings]… should complement, rather than replace, human teachers…” (p. 124) due to the importance of emotional and relationship-based learning.

AI in the public sector, such as predictive policing, facial recognition, and welfare fraud detection, often lacks adequate supervision, increasing the risk of bias and discrimination (Dwivedi et al., 2024). On a global scale, countries differ in their approaches to surveillance, data ownership, and AI ethics. Although UNESCO and the OECD are developing policies, current frameworks lack consistency in application (Gama & Magistretti, 2025).

